Real-time Acoustic Room Auralization

Earlier this year, an acoustician friend from my Chalmers days visited, which led to many discussions about real-time auralization of acoustic spaces, the subject of her thesis and her current work.

auralization

Auralization is...

the process of rendering audible, by physical or mathematical modeling, the sound field of a source in a space, in such a way as to simulate the binaural listening experience at a given position in the modeled space.

The ability to recreate listening environments has always been one of the aims of acoustics and audio engineering. The goal has been not only to recreate the sensation of the speech or music, but also allow the recreation of the aural impression of the acoustic characteristics of a space, be it outdoors or indoors.

(source)

In short, the idea is to simulate an aural experience of a space (a room, a church, a hallway, a garden, etc.). It could be as simple as recording that space and then playing it back to a person wearing headphones, or as complicated as having a multi-loudspeaker setup in anechoic chambers.

My acoustician friend, though, was looking at a newer idea: doing this in real time, so that you can interact with the simulated aural experience by speaking and hear the result of your voice interacting with the simulated space. Such interactivity has been shown to make the simulation feel more realistic.

browsers and webaudio

My friend’s setup for real-time auralization was complex, since it had to deal with echoes and feedback. I wondered if this could be achieved, albeit with much reduced functionality, on modern mobile platforms.

I took a stab at it on iOS, since CoreAudio is pretty decent at real-time processing. It seemed promising, but I was too lazy to deal with the complexity of making a decent UI for it.

That’s when I remembered that Chrome had announced that they were adding a bridge between WebAudio and getUserMedia/Stream. This would allow audio from the microphone (getUserMedia) to be piped into WebAudio. WebAudio is a low-latency, real-time audio processing API in the browser. So, theoretically, I would be able to do some kind of real-time auralization in the browser.

Also, being in the browser meant I could publish it easily and skinning it with a nice UI would only require me to annoy/impress some friends.

auralizr

After a weekend of hacking out the internals, creating a nice UI and interactions, and finding good source material, we put out a real-time auralization demo called Auralizr.

The demo showcases the flexibility of the modern Web Platform and shows that real-time auralization can be implemented (to a certain extent) on the Web.

Since all the audio processing is done in the browser, this could happily be hosted on GitHub Pages.

how does it work?

The getUserMedia/Stream API allows browsers to access audio/video streams from the underlying OS. Of course, the browser will ask for user permission to use the microphone.

Currently getUserMedia is only supported by Chrome and Firefox.

The navigator.getUserMedia call with the constraints {audio:true} passes a MediaStream to its success callback.

Here is a quick demo of getting some audio from your microphone and adding it to a volume meter.

Don’t forget to allow your browser to use your microphone

(source)

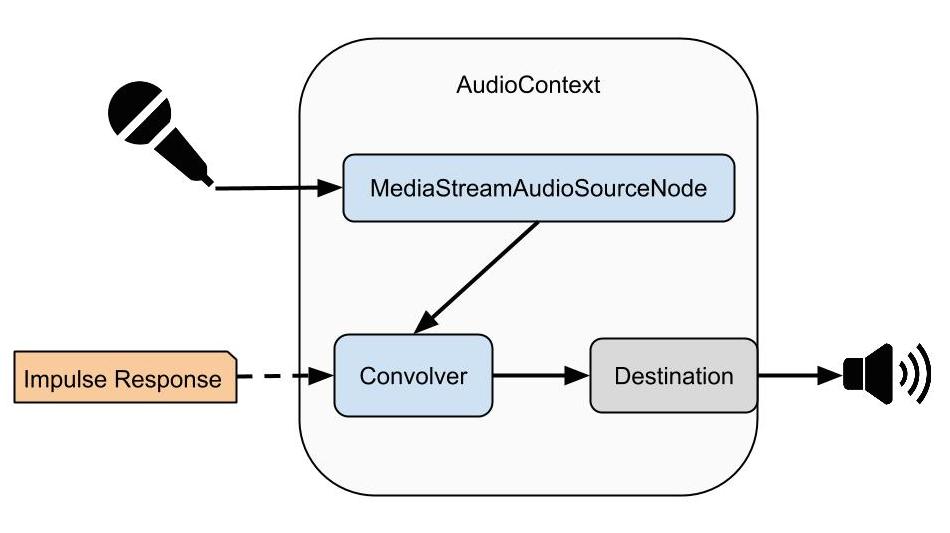
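A volume meter like this one boils down to measuring the level of each block of microphone samples. Here is a minimal sketch of that idea, not the demo's actual source: `rmsLevel` and `startVolumeMeter` are hypothetical names, and the wiring assumes a browser that provides `AnalyserNode.getFloatTimeDomainData`.

```javascript
// Root-mean-square level of one block of time-domain samples in [-1, 1].
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (let i = 0; i < samples.length; i++) {
    sumOfSquares += samples[i] * samples[i];
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Browser-only wiring (hypothetical helper, not taken from the demo):
// taps the microphone stream with an AnalyserNode and reports its level
// once per animation frame through the onLevel callback.
function startVolumeMeter(audioContext, stream, onLevel) {
  const source = audioContext.createMediaStreamSource(stream);
  const analyser = audioContext.createAnalyser();
  analyser.fftSize = 2048;
  // The analyser is not connected to the destination, so nothing is played back.
  source.connect(analyser);

  const samples = new Float32Array(analyser.fftSize);
  function tick() {
    analyser.getFloatTimeDomainData(samples);
    onLevel(rmsLevel(samples));
    requestAnimationFrame(tick);
  }
  tick();
}
```

The `onLevel` callback would typically set the width or height of a meter element. Only `rmsLevel` is pure; the rest needs a live AudioContext and a microphone stream.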

The WebAudio API has an audioContext.createMediaStreamSource method which wraps this MediaStream and exposes a standard Web Audio AudioNode called MediaStreamAudioSourceNode.

    navigator.getUserMedia( {audio:true}, function (stream) {
        // Wrap the microphone stream in a Web Audio source node.
        mediaStreamSource = audioContext.createMediaStreamSource( stream );
    }, function () {
        console.log("Error getting audio stream from getUserMedia");
    });

Once the audio from the microphone is in WebAudio, it’s a simple task of passing it through a ConvolverNode to the output.

    convolver = audioContext.createConvolver();
    mediaStreamSource.connect(convolver);
    convolver.connect(audioContext.destination);

Here is a quick overview of the connections.
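Put together, the whole chain (microphone, convolver, output) fits in one small function. This is a sketch under assumptions rather than Auralizr's actual code: `buildAuralizationGraph` and `startAuralization` are hypothetical names, `impulseResponse` is assumed to be an already decoded AudioBuffer, and the usage helper relies on the promise-based `navigator.mediaDevices.getUserMedia` found in current browsers instead of the callback form used above.

```javascript
// Builds microphone -> convolver -> output on an existing AudioContext.
// `impulseResponse` is assumed to be an already decoded AudioBuffer.
function buildAuralizationGraph(audioContext, stream, impulseResponse) {
  const mediaStreamSource = audioContext.createMediaStreamSource(stream);
  const convolver = audioContext.createConvolver();
  // The convolver needs an impulse response; with no buffer set it outputs silence.
  convolver.buffer = impulseResponse;

  mediaStreamSource.connect(convolver);
  convolver.connect(audioContext.destination);
  return { mediaStreamSource, convolver };
}

// Browser-only usage (hypothetical helper): ask for the microphone, then wire it up.
function startAuralization(audioContext, impulseResponse) {
  return navigator.mediaDevices
    .getUserMedia({ audio: true })
    .then((stream) => buildAuralizationGraph(audioContext, stream, impulseResponse));
}
```

Returning both nodes keeps them reachable, so the impulse response can be swapped later by assigning a new buffer to `convolver.buffer`.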

convolution and impulse responses

The real magic happens in the ConvolverNode. Convolution allows a signal to undergo elaborate processing based on a model of a given system. The model of this system (an impulse response) defines how the system affects the signal.

Here is a nice gif which tries to explain the math behind Convolution.

(source)

These impulse responses can be considered mathematical models of the system: they affect a given signal the same way the system itself would have. So given the impulse responses of the aural spaces we want to recreate (e.g. a hall, a church, etc.), you can convolve them with the audio from the microphone, and out comes audio that sounds as if you were speaking in that space.

I created a simple demo that showcases how convolution can affect a given audio signal. The original sound is a gunshot played in a loop. If you pass it through a Convolver with various impulse responses, it sounds as if you’re listening to the gunshot in a different space.
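To make the operation concrete, here is a small sketch of direct (time-domain) convolution on plain arrays. The `convolve` function and the toy "room" are made up for illustration. This is the textbook definition, not how ConvolverNode is implemented; real-time convolvers typically use faster FFT-based methods.

```javascript
// Direct convolution: every input sample launches a scaled copy of the
// impulse response, and the overlapping copies are summed into the output.
function convolve(signal, impulseResponse) {
  if (signal.length === 0 || impulseResponse.length === 0) return [];
  const output = new Array(signal.length + impulseResponse.length - 1).fill(0);
  for (let n = 0; n < signal.length; n++) {
    for (let k = 0; k < impulseResponse.length; k++) {
      output[n + k] += signal[n] * impulseResponse[k];
    }
  }
  return output;
}

// A toy "room": the direct sound plus one echo at half amplitude, two samples later.
const dry = [1, 0, 0, 0.5];
const room = [1, 0, 0.5];
console.log(convolve(dry, room)); // [1, 0, 0.5, 0.5, 0, 0.25]
```

Each sample of the dry signal shows up twice in the result: once at its original position and once as a quieter echo two samples later. A measured impulse response of a church does the same thing with many thousands of reflections.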

Use headphones while listening to these sounds. Also, sounds are loud.

For the Auralizr demo, users would be listening on headphones, so I needed binaural impulse responses of various types of spaces. I found OpenAirLib, which has a ton of good-quality impulse response recordings licensed under Creative Commons. The responses are available as wav files, which can be loaded and decoded using the WebAudio API. The returned AudioBuffer can be used as an impulse response in a ConvolverNode.
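Loading and decoding a response could look roughly like the sketch below. It is an assumption-laden illustration, not Auralizr's code: `loadImpulseResponse` is a hypothetical helper, it uses `fetch` (an XMLHttpRequest with an arraybuffer response type would do the same job), and the file name in the usage comment is a placeholder rather than a real OpenAirLib path.

```javascript
// Fetches an audio file and decodes it into an AudioBuffer.
// `fetchFn` is injectable so the function can be exercised outside a browser.
function loadImpulseResponse(audioContext, url, fetchFn = globalThis.fetch) {
  return fetchFn(url)
    .then((response) => {
      if (!response.ok) {
        throw new Error('Could not load impulse response: ' + url);
      }
      return response.arrayBuffer();
    })
    .then(
      (encoded) =>
        new Promise((resolve, reject) => {
          // Callback form of decodeAudioData, wrapped in a Promise.
          audioContext.decodeAudioData(encoded, resolve, reject);
        })
    );
}

// Hypothetical usage; 'impulses/church.wav' is a placeholder path.
// loadImpulseResponse(audioContext, 'impulses/church.wav').then((buffer) => {
//   convolver.buffer = buffer;
// });
```

Switching spaces in the UI then amounts to loading another file and assigning the decoded buffer to the same ConvolverNode.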

Putting everything together we created Auralizr. Auralizr is open source and you can look at how everything was hooked up on GitHub.

Note: There seems to be a latency issue with the getUserMedia-WebAudio bridge on Firefox (Nightly 32.0a1) and even on Chrome (35.0.1961) on Android. As a result, the illusion of being in another space is lost. I am working on an experiment to measure this delay.